GCPR VMV | 2020

DAGM German Conference on Pattern Recognition, Tübingen

Attending DAGM GCPR | VMV | VCBM 2020 is easy:

Follow the YouTube streams and ask questions in the Discord chat room of the corresponding session!

YouTube live streams | Discord server

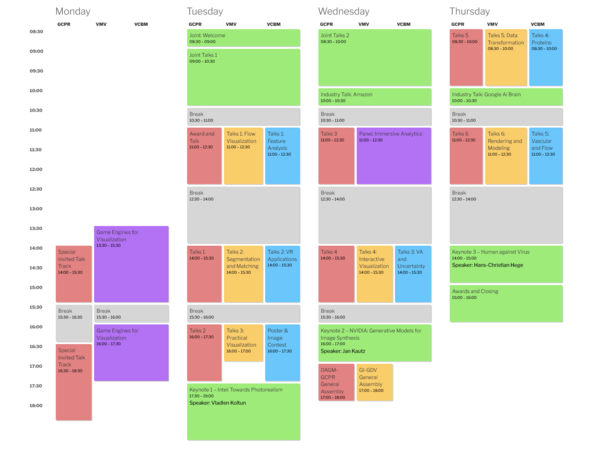

Detailed program

Monday

13:30–17:30 Tutorial: Game Engines for Visualization

Chair: Michael Krone

13:30-14:00

In recent years, games engines like Unreal Engine and Unity have gained attention as a foundation for data visualization in academic research, especially since they can be used freely for personal projects. In addition, the creators of these engines are also extending their use in industrial applications beyond entertainment. While using such an engine of course requires additional knowledge or training, the benefits are that it offers a stable software environment that already provides the developers with a lot of functionality (e.g., rendering capabilities, user interaction, or cross-platform usability). In this half-day tutorial, two popular game engines (Unreal Engine and Unity 3D) will be introduced with respect to their potential for data visualization.

14:00-15:30

Unreal Engine, by Epic Games, has evolved over the last two decades and is a proven high-end game engine for delivering AAA gaming titles. In recent years Epic has made significant strides into non-game applications, such as Architectural Visualization, Product Visualization, Mixed Reality Live Broadcasting, and Virtual Production in the movie industry.

In this part to the tutorial, we will give an overview of Unreal Engine then dive into the Unreal Editor and explore how it can be used for Data Visualization. We will have a look at different aspects, such as Materials, Blueprints, coding in C++, and Niagara, the visual effects plugin, by reviewing and exploring some examples.

16:00-17:30

Unity 3D is currently the most used game engine thanks to the support of a large choice of platforms and a relative ease of use compared to its competitors. Recent initiatives by Unity technologies provide high-end performance (C# burst compiler) and customizable rendering pipelines to extend Unity capabilities and get the most out of the game engine. These changes make Unity even more relevant for scientific visualization in a game engine.

In this part of the tutorial, we will tackle a classical visualization problem: rendering a large amount of spheres. We will take a look at different implementations to render objects using Unity and go through several optimization processes. This journey will be an opportunity to learn about coding and rendering in Unity in a visualization-oriented tutorial.

14:00–18:30 Special Invited Talk Track

Chair: Andreas Geiger, Torsten Sattler

14:00-14:30

How can we teach machines to see the world like humans? Taking inspiration from the ventral pathway in the visual brain, convolutional neural networks (CNNs) have become a key tool for solving computer vision problems—often reaching human-level performance on benchmark tasks like object recognition or detection. Despite these successes perceptual decision making and generalization in machines is still very different from humans. In this talk, I will present ongoing work of my lab to better understand these differences between human vision and CNNs studying constrained architectures, adversarial testing, and out-of-domain generalization.

14:30-15:00

While the quality of GAN image synthesis has tremendously improved in recent years, our ability to control and condition the output is still limited. Focusing on StyleGAN, we introduce a simple and effective method for making local, semantically-aware edits to a target output image. This is accomplished by borrowing elements from a source image, also a GAN output, via a novel manipulation of style vectors. Our method requires neither supervision from an external model, nor involves complex spatial morphing operations. Instead, it relies on the emergent disentanglement of semantic objects that is learned by StyleGAN and StyleGAN 2 during its training. Semantic editing is demonstrated on GANs producing human faces, indoor scenes, cats, and cars. We measure the locality and photorealism of the edits produced by our method, and find that it accomplishes both. This is joint work with Edo Collins, now at Google, and Raja Bala and Bob Price from PARC.

15:00-15:30

I will present three recent projects within the 3D Deep Learning research line from my team at Google Research: (1) A neural network model for reconstructing the 3D shape of multiple objects appearing in a single RGB image (ECCV’20). (2) A new conditioning scheme for normalizing flow models. It enables several applications such as reconstructing an object’s 3D point cloud from an image, or the converse problem of rendering an image given a 3D point cloud (CVPR’20). (3) A neural rendering framework that maps a voxelized object into a high quality image. It renders highly textured objects and illumination effects such as reflections and shadows realistically. It allows controllable rendering: geometric and appearance modifications in the input are accurately represented in the final rendering (CVPR’20).

16:30-17:00

Computer Vision has been revolutionized by Machine Learning and in particular Deep Learning. For many problems which have been studied for decades, state-of-the-art performance has dramatically improved by using artificial neural networks. However, these methods come with their own challenges concerning robustness and security. In this talk I will summarize some of our recent efforts in this space. E.g., while context information is essential for best performance, it might lead to overconfident or even wrong predictions of our methods. Also, I will discuss new insights about reverse engineering deep neural networks as well as stealing the entire functionality of them cheaply. While we are clearly at the infancy of understanding robustness and security implications of deep neural networks, the talk aims to raise awareness as well as to motivate more researchers to address these important challenges.

17:00-17:30

High fidelity digital 3D environments have been proposed in recent years, however, it remains extreme challenging to automatically populate such environment with realistic human bodies. Existing work utilizes images, depths or semantic maps to represent the scene, and parametric human models to represent 3D bodies in the scene. While being straightforward, their generated human-scene interactions are often lack of naturalness and physical plausibility. Our key observation is that humans interact with the world through body scene contact. To explicitly and effectively represent the physical contact between the body and the world is essential for modeling human-scene interaction. To that end, we propose a novel interaction representation, which explicitly encodes the proximity between the human body and the 3D scene around it. Specifically, given a set of basis points on a scene mesh, we leverage a conditional variational autoencoder to synthesize the distance from every basis point to its closest point on a human body. The synthesized proximal relationship between human body and the scene can indicate which region a person tends to contact. Furthermore, based on such synthesized proximity, we are able to effectively obtain expressive 3D human bodies that interact with the 3D scene naturally. Our perceptual study shows that our model significantly improves the state-of-the-art method, approaching the realism of real human-scene interaction. We believe our method makes an important step towards the fully automatic synthesis of realistic 3D human bodies in 3D scenes.

17:30-18:00

We study the problem of learning from multiple untrusted data sources, a scenario of increasing practical relevance given the recent emergence of crowdsourcing and collaborative learning paradigms. Specifically, we analyze the situation in which a learning system obtains datasets from multiple sources, some of which might be biased or even adversarially perturbed. It is known that in the single-source case, an adversary with the power to corrupt a fixed fraction of the training data can prevent “learnability”, that is, even in the limit of infinitely much training data, no learning system can approach the optimal test error. I present recent work with Nikola Konstantinov in which we show that, surprisingly, the same is not true in the multi source setting, where the adversary can arbitrarily corrupt a fixed fraction of the data sources.

18:00-18:30

In the past three years we witnessed the rise of micro drones with weight ranging from 30g up to 500g performing autonomous agile maneuvers with onboard sensing and computation. In this talk, I will summarize the key scientific and technological achievements and the next challenges.

Tuesday

JOINT

08:30–09:00 Welcome

Chair: Andreas Geiger, Hendrik Lensch, Michael Krone, Kay Nieselt

JOINT

09:00–10:30 Joint Talks 1

Chair: Hendrik Lensch

09:00-09:15

Deep Affine Normalizing Flows are efficient and powerfulmodels for high-dimensional density estimation and sample generation.Yet little is known about how they succeed in approximating complexdistributions, given the seemingly limited expressiveness of individualaffine layers. In this work, we take a first step towards theoretical un-derstanding by analyzing the behaviour of asingleaffine coupling layerunder maximum likelihood loss. We show that such a layer estimates andnormalizes conditional moments of the data distribution, and derive atight lower bound on the loss depending on the orthogonal transforma-tion of the data before the affine coupling. This bound can be used toidentify the optimal orthogonal transform, yielding a layer-wise trainingalgorithm for deep affine flows. Toy examples confirm our findings andstimulate further research by highlighting the remaining gap betweenlayer-wise and end-to-end training of deep affine flows.

09:15-09:30

Today’s deep learning systems deliver high performance based on end-to-end training but are notoriously hard to inspect. We argue that there are at least two reasons making inspectability challenging: (i) representations are distributed across hundreds of channels and (ii) a unifying metric quantifying inspectability is lacking. In this paper, we address both issues by proposing Semantic Bottleneck (SB) layers, integrated into pretrained networks, to align channel outputs with individual visual concepts and introduce the model agnostic AUiC metric to measure the alignment. We present a case study on semantic segmentation to demonstrate that SBs improve the AUiC up to four-fold over regular network outputs. We explore two types of SB-layers in this work: while concept-supervised SB-layers (SSB) offer the greatest inspectability, we show that the second type, unsupervised SBs (USB), can match the SSBs by producing one-hot encodings. Importantly, for both SB types, we can recover state of the art segmentation performance despite a drastic dimensionality reduction from 1000s of non aligned channels to 10s of semantics-aligned channels that all downstream results are based on.

09:30-10:00

We present a compact and intuitive geometry representation for technical models that are initially given as triangle meshes. For CAD-like models the defining features often coincide with the intersection between smooth surface patches. Our algorithm therefore first segments the input model into patches of constant curvature. The intersections between these patches are encoded through B\’ezier curves of adaptive degree, the patches enclosed by them are encoded by their (constant) mean and Gaussian curvatures. This sparse geometry representation enables intuitive understanding and editing by manipulating either the patches’ curvature values and/or the feature curves. During decoding/reconstruction we exploit remeshing and hence are independent of the underlying triangulation, such that besides the feature curve topology no additional connectivity information has to be stored. We also enforce discrete developability for patches with vanishing Gaussian curvature in order to obtain straight ruling lines.

10:00-10:30

Retrospect on 10 years of VCBM.

GCPR

11:00–12:30 GCPR Award and Talk

Chair: Reinhard Koch

11:00-12:30

Presentation of DAGM-GCPR Awards for YRF Best Master, Best Dissertation and German Pattern Recognition Award

11:00-12:30

Award Lecture: From 3D Scanning to Neural Rendering

VMV

11:00–12:30 Talks 1: Flow Visualization

Chair: Nils Rodrigues

11:00-11:45

Descriptions of motion are found everywhere in graphics, whether it is in computer animation, physics simulation, for optical flow, or in scientific visualization. The common denominator in all of the above is the mathematical language used to describe motion, namely differential equations. In this talk, we discuss how visualization can help us to analyze motion. We begin with a brief introduction into the mathematical modeling of trajectories and their visualization through phase portraits. We then see how optimizations can be used to lower the dimensionality of phase spaces. Afterwards, we move from dynamical systems containing point objects to the visualization of continuous fields in motion, such as fluids.

11:45-12:05

Finding static visual representations of time-varying phenomena is a standard problem in visualization. We are interested in unsteady flow data, i.e., we want to find a static visualization — one single still image — that shows as much of the global behavior of particle trajectories (path lines) as possible. We propose a new approach, which we call steadification: given a time-dependent flow field v, we construct a new steady vector field w such that the stream lines of w correspond to the path lines of v. With this, the temporal behavior of v can be visualized by using standard methods for steady vector field visualization. We present a formal description as a constraint optimization that can be mapped to finding a set cover, a NP-hard problem that is solved approximately and fairly efficiently by a greedy algorithm. As an application, we introduce the first 2D image-based flow visualization technique that shows the behavior of path lines in a static visualization, even if the path lines have a significantly different behavior than stream lines.

12:05-12:25

Trajectories of moving objects are of interest in multiple research fields ranging from geographic information science to behavioral science. Movement patterns of the studied object are often analyzed. Therefore, similar trajectories are retrieved which introduces the need for a similarity measure of trajectories. Similarity measures taken the shape of the trajectory into account are widely researched. Though, there are more attributes that can be relevant to distinguish different movements. One of them is the object orientation along the trajectory. The orientation is of interest in research fields where it influences the movement behavior like the impact of external forces in particles simulations. Trajectory retrieval taking particle orientation into account is still an open research question. Therefore, this work presents a similarity measure for trajectory retrieval considering the complex interaction of linear and rotational movement of particles. Furthermore, the similarity measure applies partial matching allowing for exploration of trajectory parts such as events that may occur along a trajectory tracked over a long time. The proposed algorithm is incorporated into an application for exploratory trajectory visualization.

VCBM

11:00–12:30 Talks 1: Feature Analysis

Chair: Anna Vilanova

11:00-11:25

Multi-shell diffusion MRI and Diffusion Spectrum Imaging are modern neuroimaging modalities that acquire diffusion weighted images at a high angular resolution, while also probing varying levels of diffusion weighting (b values). This yields large and intricate data for which very few interactive visualization techniques are currently available. We designed and implemented the first system that permits an interactive, iteratively refined classification of such data, which can serve as a foundation for isosurface visualizations and direct volume rendering. Our system leverages features learned by a Convolutional Neural Network. CNNs are state of the art for representation learning, but training them is too slow for interactive use. Therefore, we combine a computationally efficient random forest classifier with autoencoder based features that can be pre-computed by the CNN. Since features from existing CNN architectures are not suitable for this purpose, we design a specific dual-branch CNN architecture, and carefully evaluate our design decisions. We demonstrate that our approach produces more accurate classifications compared to learning with raw data, established domain-specific features, or PCA dimensionality reduction.

11:25-11:50

Frequency distributions (FD) are an important instrument when analyzing and investigating scientific data. In volumetric visualization, for example, frequency distributions visualized as histograms, often assist the user in the process of designing transfer function (TF) primitives. Yet a single point in the distribution can correspond to multiple features in the data, particularly in low-dimensional TFs that dominate time-critical domains such as health care. In this paper, we propose contributions to the area of medical volume data exploration, in particular Computed Tomography (CT) data, based on the decomposition of local frequency distributions (LFD). By considering the local neighborhood utilizing LFDs we can incorporate a measure for neighborhood similarity to differentiate features thereby enhancing the classification abilities of existing methods. This also allows us to link the attribute space of the histogram with the spatial properties of the data to improve the user experience and simplify the exploration step. We propose three approaches for data exploration which we illustrate with several visualization cases highlighting distinct features that are not identifiable when considering only the global frequency distribution. We demonstrate the power of the method on selected datasets.

11:50-12:30

Invited Talk: Bringing Deep Learning into clinical practice – Quantification of pediatric hydrocephalus

GCPR

14:00–15:30 Talks 1

Chair: Zeynep Akata

14:00-14:15

At the present time Optical Coherence Tomography (OCT) is among the most commonly used non-invasive imaging methods for the acquisition of large volumetric scans of human retinal tissues and vasculature. Due to tissue-dependent speckle noise, the elaboration of automated segmentation models has become an important task in the field of medical image processing. We propose a novel, purely data driven geometric approach to order-constrained 3D OCT retinal cell layer segmentation which takes as input data in any metric space. This makes it unbiased and therefore amenable for the detection of local anatomical changes of retinal tissue structure. To demonstrate robustness of the proposed approach we compare four different choices of features on a data set of manually annotated 3D OCT volumes of healthy human retina. The quality of computed segmentations is compared to the state of the art in terms of mean absolute error and Dice similarity coefficient.

14:15-14:30

Various hand-crafted features representations of bio-signals rely primarily on the amplitude or power of the signal in specific frequency bands. The phase component is often discarded as it is more sample specific, and thus more sensitive to noise, than the amplitude. However, in general, the phase component also carries information relevant to the underlying biological processes. In fact, in this paper we show the benefits of learning the coupling of both phase and amplitude components of a bio-signal. We do so by introducing a novel self-supervised learning task, which we call Phase-Swap, that detects if bio-signals have been obtained by merging the amplitude and phase from different sources. We show in our evaluation that neural networks trained on this task generalize better across subjects and recording sessions than their fully supervised counterpart.

14:30-14:37

Deep learning enables impressive performance in image recognition using large-scale artificially-balanced datasets. However, real-world datasets exhibit highly class-imbalanced distributions, yielding two main challenges: relative imbalance amongst the classes and data scarcity for mediumshot or fewshot classes. In this work, we address the problem of long-tailed recognition wherein the training set is highly imbalanced and the test set is kept balanced. Differently from existing paradigms relying on data-resampling, cost-sensitive learning, online hard example mining, loss objective reshaping, and/or memory-based modeling, we propose an ensemble of class-balanced experts technique that combines the strength of diverse classifiers. Our ensemble of class-balanced experts reaches results comparable to the state of the art and an extended ensemble establishes a new state-of-the-art on two benchmarks for long-tailed recognition. We conduct extensive experiments to analyse the performance of the ensembles, and discover that in modern datasets, relative imbalance is a harder problem than data scarcity. The training and evaluation code is available at https://github.com/ssfootball04/class-balanced-experts.

14:37-14:44

Intuitively, image classification should profit from using spatial information. Recent work, however, suggests that this might be overrated in standard CNNs. In this paper, we are pushing the envelope and aim to investigate the reliance on spatial information further. We propose to discard spatial information via shuffling locations or average pooling during both training and testing phases to investigate the impact on individual layers. Interestingly, we observe that spatial information can be deleted from later layers with small accuracy drops, which indicates spatial information at later layers is not necessary for good test accuracy. For example, the test accuracy of VGG-16 only drops by 0.03% and 2.66% with spatial information completely removed from the last 30% and 53% layers on CIFAR-100, respectively. Evaluation on several object recognition datasets with a wide range of CNN architectures shows an overall consistent pattern.

14:44-14:51

The training of deep-learning-based 3D object detectors requires large datasets with 3D bounding box labels for supervision that have to be generated by hand-labeling. We propose a network architecture and training procedure for learning monocular 3D object detection without 3D bounding box labels. By representing the objects as triangular meshes and employing differentiable shape rendering, we define loss functions based on depth maps, segmentation masks, and ego- and object-motion, which are generated by pre-trained, off-the-shelf networks. We evaluate the proposed algorithm on the real-world KITTI dataset and achieve promising performance in comparison to state-of-the-art methods requiring 3D bounding box labels for training and superior performance to conventional baseline methods.

14:51-15:30

Discussion

VMV

14:00–15:30 Talks 2: Segmentation and Matching

Chair: Martin Oswald

14:00-14:45

Video Object Segmentation (VOS) is the task of segmenting a set of objects in all the frames of a video. In the semi-supervised setting, the first frame mask of each object of interest is provided at test time. Many VOS approaches follow the one-shot principle and separately fine-tune a segmentation model to each object’s given mask. However, recent VOS methods refrain from such a test time optimization as it is considered to suffer from several shortcomings including a high test runtime. In this talk, I will present the efficient One-Shot Video Object Segmentation (e-OSVOS) framework. In contrast to most VOS approaches, e-OSVOS decouples the object detection task and only predicts local segmentation masks by applying a modified version of Mask R-CNN. The one-shot test runtime and performance are optimized without a laborious and handcrafted hyperparameter search. To this end, we meta learn the model initialization and learning rates for the test time optimization. We address the issue of degrading performance over the course of the sequence by continuously fine-tuning the model on previous mask predictions supported by a bounding box propagation. The state-of-the-art results of e-OSVOS will hopefully convince you to give one-shot finetuning methods another look.

14:45-15:05

We address the automatic segmentation of computer tomographic scans of ancient clay tablets with cuneiform inscriptions enclosed inside a clay envelope. Such separation of parts of similar material properties in the scan enables domain scientists tovirtually investigate the historically valuable artefacts by means of 3D visualization without physical destruction. We investigate two segmentation methods, the Priority-Flood algorithm and the Compact Watershed algorithm, the latter being modified by employing a distance metric that takes the ellipsoidal shape of the artefacts into account. Additionally, we propose a novel pre-segmentation method that suppresses the intensity values of the distance transform at contact points between clay envelope and tablet. We apply all methods to volumetric scans of a replicated clay tablet and analyze their performance under varying noise distributions. Evaluation by comparison to a manually segmented ground truth shows best results for the novel suppression-based approach.

15:05-15:25

We present a machine learning based and wavelet enhanced line feature descriptor for line feature matching. Therefor we trained a neural network to compute a descriptor for a line, given preprocessed information from the image area around the line. In the preprocessing step we utilize wavelets to extract meaningful information for the descriptor from the image. This process is inspired by the human vision system. We used the Unreal Engine 4 and multiple different freely available scenes to create our training data. We conducted the evaluation on ground truth labeled images of our own and from the Middlebury Stereo Dataset. To show the advancement of our method in terms of matching quality, we compare it to the Line Band Descriptor (LBD), to the Deep Learning Based Line Descriptor (DLD), which we used as a starting point for this work, and to the Learnable Line Segment Descriptor for Visual SLAM (LLD).

VCBM

14:00–15:30 Talks 2: VR Applications

Chair: Björn Sommer

14:00-14:15

This is a work in progress paper that describes a novel endoscope interface designed for use in an immersive virtual reality surgical simulator. We use an affordable off the shelf head mounted display to recreate the operating theatre environment. A hand held controller has been adapted so that it feels like the trainee is holding an endoscope controller with the same functionality. The simulator allows the endoscope shaft to be inserted into a virtual patient and pushed forward to a target position. The paper describes how we have built this surgical simulator with the intention of carrying out a full clinical study in the near future.

14:15-14:30

Personalized anatomical information of the heart is usually obtained from the visual analysis of patient-specific medical images with standard multiplanar reconstruction (MPR) of 2D orthogonal slices, volume rendering and surface mesh views. Commonly, medical data is visualized in 2D flat screens, thus hampering the understanding of 3D complex anatomical details, including incorrect depth/scaling perception, which is critical for some cardiac interventions such as medical device implantations. Virtual reality (VR) is becoming a valid complementary technology overcoming some of the limitations of conventional visualization techniques and allowing an enhanced and fully interactive exploration of human anatomy. In this work, we present VRIDAA, a VR-based platform for the visualization of patient-specific cardiac geometries and the virtual implantation of left atrial appendage occluder (LAAO) devices. It includes different visualization and interaction modes to jointly inspect 3D LA geometries and different LAAO devices, MPR 2D imaging slices, several landmarks and morphological parameters relevant to LAAO, among other functionalities. The platform was designed and tested by two interventional cardiologists and LAAO researchers, obtaining very positive user feedback about its potential, highlighting VRIDAA as a source of motivation for trainees and its usefulness to better understand the required surgical approach before the intervention.

14:30-14:45

The exploration of time-dependent measured or simulated blood flow is challenging due to the complex three-dimensional structure of vessels and blood flow patterns. Especially on a 2D screen, understanding their full shape and interacting with them is difficult. Critical regions do not always stand out in the visualization and may easily be missed without proper interaction and filtering techniques. The FlowLens [GNBP11] was introduced as a focus-and-context technique to explore one specific blood flow parameter in the context of other parameters for the purpose of treatment planning. With the recent availability of affordable VR glasses it is possible to adapt the concepts of the FlowLens into immersive VR and make them available to a broader group of users. Translating the concept of the Flow Lens to VR leads to a number of design decisions not only based around what functions to include, but also how they can be made available to the user. In this paper, we present a configurable focus-and-context visualization for the use with virtual reality headsets and controllers that allows users to freely explore blood flow data within a VR environment. The advantage of such a solution is the improved perception of the complex spatial structures that results from being surrounded by them instead of observing through a small screen.

14:45-15:00

We present a VR-based prototype for learning the hand anatomy. The prototype is designed to support embodied cognition, i.e., a learning process based on movements. The learner employs the prototype in VR by moving their own hand and fingers and observing how the virtual anatomical hand model mirrors this movement. The display of anatomical systems and their names can be adjusted. The prototype is deployed on the Oculus Quest and uses its native hand tracking capabilities to obtain the hand posture of the user. The potential of the prototype is shown with a small user study.

15:00-15:15

Specific phobias are among the most common mental diseases, affecting the lives of millions of people. Yet, many cases remain untreated and even undiagnosed, partly due to entry barriers such as waiting times and inconvenience of therapy. To improve the therapeutic options and convenience for the treatment of specific phobias, we implemented a virtual reality application for treating acrophobia (fear of heights) with in-virtuo exposure therapy. Our concept is based on principles from psychology and interaction design. This concept is then implemented using the game engine Unity and Oculus Rift headset as a target device for VR display. Our application has a wide range of customization options, which enables it to be personalized to individual patients. In addition, a number of motivational methods are integrated, which are intended to increase patient motivation, as motivation is essential for a successful therapy.

15:15-15:30

We introduce a Virtual Reality (VR) one-on-one tutoring system to support anatomy education. A student uses a fully immersive VR headset to explore the anatomy of the base of the human skull. A teacher guides the student by using the semi-immersive zSpace. Both systems are connected via network and each action is synchronized between both systems. The teacher is provided with various features to direct the student through the immersive learning experience. The teacher can influence the student’s navigation or provide annotations on the fly and hereby improve the student’s learning experience.

GCPR

16:00–17:30 Talks 2

Chair: Zeynep Akata

16:00-16:15

Deep neural networks have recently advanced the state-of-the-art in image compression and surpassed many traditional compression algorithms. The training of such networks involves carefully trading off entropy of the latent representation against reconstruction quality. The term quality crucially depends on the observer of the images which, in the vast majority of literature, is assumed to be human. In this paper, we aim to go beyond this notion of compression quality and look at human visual perception and image classification simultaneously. To that end, we use a family of loss functions that allows to optimize deep image compression depending on the observer and to interpolate between human perceived visual quality and classification accuracy, enabling a more unified view on image compression. Our extensive experiments show that using perceptual loss functions to train a compression system preserves classification accuracy much better than traditional codecs such as BPG without requiring retraining of classifiers on compressed images. For example, compressing ImageNet to 0.25 bpp reduces Inception-ResNet classification accuracy by only 2%. At the same time, when using a human friendly loss function, the same compression system achieves competitive performance in terms of MS-SSIM. By combining these two objective functions, we show that there is a pronounced trade-off in compression quality between the human visual system and classification accuracy.

16:15-16:30

TBA

16:30-16:37

With the success of deep learning methods in analyzing activities in videos, more attention has recently been focused towards anticipating future activities. However, most of the work on anticipation either analyzes a partially observed activity or predicts the next action class. Recently, new approaches have been proposed to extend the prediction horizon up to several minutes in the future and that anticipate a sequence of future activities including their durations. While these works decouple the semantic interpretation of the observed sequence from the anticipation task, we propose a framework for anticipating future activities directly from the features of the observed frames and train it in an end-to-end fashion. Furthermore, we introduce a cycle consistency loss over time by predicting the past activities given the predicted future. Our framework achieves state-of-the-art results on two datasets: the Breakfast dataset and 50Salads.

16:37-16:44

Segmenting objects of interest in an image is an essential building block of applications such as photo-editing and image analysis. Under interactive settings, one should achieve good segmentations while minimizing user input. Current deep learning-based interactive segmentation approaches use early fusion and incorporate user cues at the image input layer. Since segmentation CNNs have many layers, early fusion may weaken the influence of user interactions on the final prediction results. As such, we propose a new multi-stage guidance framework for interactive segmentation. By incorporating user cues at different stages of the network, we allow user interactions to impact the final segmentation output in a more direct way. Our proposed framework has a negligible increase in parameter count compared to early-fusion frameworks. We perform extensive experimentation on the standard interactive instance segmentation and one-click segmentation benchmarks and report state-of-the-art performance.

16:44-16:51

In computer vision research, the process of automating architecture engineering, Neural Architecture Search (NAS), has gained substantial interest. Due to the high computational costs, most recent approaches to NAS as well as the few available benchmarks only pro- vide limited search spaces. In this paper we propose a surrogate model for neural architecture performance prediction built upon Graph Neural Networks (GNN). We demonstrate the effectiveness of this surrogate model on neural architecture performance prediction for structurally un- known architectures (i.e. zero shot prediction) by evaluating the GNN on several experiments on the NAS-Bench-101 dataset.

16:51-17:30

Discussion

VMV

16:00–17:00 Talks 3: Practical Visualization

Chair: Andre Waschk

16:00-16:20

Virtual and mixed reality environments gain complexity due to the inclusion of multiple users and physical objects. A core challenge for developers and researchers while analyzing sessions from such environments lies in understanding the interaction between entities. Additionally, the raw data recorded from such sessions is difficult to analyze due to the simultaneous temporal and spatial changes of multiple entities. However, similar data has already been visualized in other areas of application. We analyze which aspects of these related visualizations can be leveraged for analyzing user sessions in virtual and mixed reality environments and describe a design and application space for such visualizations. First, we examine what information is typically generated in interactive virtual and mixed reality applications and how it can be analyzed through such visualizations. Next, we study visualizations from related research fields and derive seven visualization categories. These categories act as building blocks of the design space, which can be combined into specific visualization systems. We also discuss the application space for these visualizations in debugging and evaluation scenarios. We present two application examples that showcase how one can visualize virtual and mixed reality user sessions and derive useful insights from them.

16:20-16:40

While visualisation often strives for abstraction, the interactive exploration of large scientific data sets like densely sampled 3D fields or massive particle data sets still benefits from rendering their graphical representation in large detail on high-resolution displays such as Powerwalls or tiled display walls driven by multiple GPUs or even GPU clusters. Such visualisation systems are typically rather unique in their setup of hardware and software which makes transferring a visualisation application from one high-resolution system to another one a complicated task. As more and more such visualisation systems get installed, collaboration becomes desirable in the sense of sharing such a visualisation running on one site in real time with another high-resolution display on a remote site while at the same time communicating via video and audio. Since typical video conference solutions or web-based collaboration tools often cannot deal with resolutions exceeding 4K, with stereo displays or with multi-GPU setups, we designed and implemented a new system based on state-of-the-art hardware and software technologies to transmit high-resolution visualisations including video and audio streams via the internet to remote large displays and back. Our system architecture is built on efficient capturing, encoding and transmission of pixel streams and thus supports a multitude of configurations combining audio and video streams in a generic approach.

16:40-17:00

The analysis of Cultural Heritage (CH) artefacts is an important task in the Digital Humanities. Increasingly, rich CH artefact data comprising metadata of different modalities becomes available in digital libraries and research data repositories. However, the large amounts and heterogeneity of artefacts in these repositories compromise their accessibility for common domain analysis tasks, as domain researchers lack a structural overview of the spatial, temporal, and categorical traits of the artefacts in these collections. Still, researchers need to compare artefacts along different modalities, put them into context, and deal with possible uncertainties, subjectivities, or missing data. To date, many works support domain research via interactive visualisation. The majority relies primarily on visualisation of text and metadata including spatiotemporal, image and shape data. However, fewer consider these types of data in a tightly coupled way. We present an approach for tightly integrated multimodal visual exploration of large CH data collections along space, time and shape traits. Based on requirements obtained in collaboration with domain researchers, we introduce a set of interlinked views for exploration of said modalities. An appropriately defined approach automatically computes most significant correlations across different modalities, guiding the user towards detecting interesting artefact relationships. We apply our approach to pertinent archaeological data collections, and demonstrate that characteristic explorative tasks are effectively supported and domain-relevant artefact relations can be discovered.

VCBM

16:00–17:30 Poster & Image Contest

Chair: Fritz Lekschas, Gabriel Mistelbauer, Peter Mindek

16:10-17:30

Discord only: Analysis pipelines for RNA sequencing data often produce long lists of differentially expressed genes. For functional analysis, these lists can be analyzed using Gene Ontology (GO) (Ashburner et al., 2000) enrichment analysis tools such as PANTHER (Mi et al., 2019) and DAVID (Sherman et al., 2007) resulting in lists of enriched GO terms with associated p-values. Since GO terms are organized roughly hierarchical, an enrichment of a child term propagates to enrichments of parent terms which can lead to redundancy in the list. Moreover, when GO enrichments are computed for multiple experimental conditions, it can be of interest to compare and summarize the results. With our tool we introduce a technique to reduce the redundancy in multiple lists of GO terms and provide an interactive visualization for list comparisons. We developed a web application that takes multiple lists of GO terms or genes as input. For lists of genes GO term enrichment is computed using the PANTHER API resulting in lists of GO terms. The GO terms are clustered hierarchically using a version of the REVIGO algorithm adapted to use multiple lists of GO terms simultaneously (Supek et al., 2011). REVIGO clusters GO terms based on their semantic similarity, p-values, and relatedness resulting in a hierarchical clustering where less dispensable terms are placed closer to the root. The clustering is visualized in a clustered heatmap showing the p-values for each term. With sliders users can filter dispensable GO terms and select a cutoff for the extraction of non-hierarchical clusters resulting in a set of GO terms with reduced redundancy and a meaningful grouping. In order to compare the clusters a treemap is visualized for each condition. Moreover, the treemaps can be compared by animation which facilitates viewing changes betweeen conditions and bar charts for detailed comparisons of single terms. In order to provide a global overview, the overall similarity of the lists is visualized in a PCA plot and a correlation heatmap. A detailed table shows further information about the GO terms. This tool will enable researchers to compare the functional analysis of multiple experimental conditions. Moreover, it can be extended to compare GO enrichment results of any other application where gene lists are produced, such as the analysis of clusters in gene co-expression networks or the comparison of differentially expressed genes in multiple species.

16:10-17:30

Discord only: Sequence diagrams (SD) are a common way to visualize the amino acid sequence of proteins. Usually, not only the sequence is represented, but also other relevant information like binding sites or the secondary structure, if available. Although SD are often used in conjunction with 3D visualizations of the protein structure, they are also useful by themselves for visually analyzing protein data. SD are often visualized as static, precomputed images. Therefore, we present an interactive SD visualization that shows not only the attributes of the protein that are stored in the RCSB Protein Data Bank, but can also visualize additional information provided by external analysis tools. Furthermore, we try to enhance the basic idea of SDs by adding per-atom information instead of showing only per-amino-acid attributes. Figure 1 (left) shows our SD, which was implemented as an interactive, web-based visualization using the JavaScript library D3. Different attributes per amino acid are represented in the rows: the type of amino acid, the chain ID of the amino acid chain, the secondary structure, the BFactor, the hydrophobicity, the predicted intrinsic disorder, and the binding sites. Most of these attributes are encoded using colored rectangles. BFactor, hydrophobicity, and disorder prediction are quantitative attributes for which individual color gradients are used. The secondary structure is graphically depicted via different types of helices and arrows. Binding sites are encoded as colored rings. To provide users with more detailed information, we extended the idea of the classical SD that only shows per-amino-acid attributes. For per-atom attributes like the BFactor, our system computes the overall minimum and maximum values, and the average value per residue. Figure 1 (right) shows three different possible visualizations offered by our extended SD that show either the summary statistics or the individual values per atom. Our SD allows users to interactively hide attribute rows, change the number of amino acid per row, and it offers a tooltip with additional information if the user hovers an amino acid.

16:10-17:30

Discord only: Pangenome graphs built from raw sets of alignments may have complex structures which can introduce difficulty in downstream analyses, visualization, mapping, and interpretation. Graph sorting aims to find the best node order for a 1D and 2D layout to simplify these complex regions. Pangenome graphs embed linear pangenomic sequences as paths in the graph, but to our knowledge, no algorithm takes into account this biological information in the sorting. Moreover, existing 2D layout methods struggle to deal with large graphs. We present a new layout algorithm to simplify a pangenome graph, by using path-guided stochastic gradient descent to move a single pair of nodes at a time. We exemplify how the 1D path-guided SGD implementation is a key step in general pangenome analyses such as pangenome graph linearization and simplification.

16:10-17:30

Discord only: Molecular representations are taking an important role in communicating ideas, in generating new hypotheses on biological mechanisms and in analysing molecular simulations. However, the current devices used to observe and manipulate these molecular systems are typically limited to the two dimensions of the computer screen combined with a keyboard and a mouse offering limited interaction capabilities. Nowadays, virtual reality headsets offer a more performant and accessible solution. However, adaptations are necessary to fully benefit from the advantages of using virtual reality for scientific visualisation. This poster presents a few examples implemented with the UnityMol software. In addition to immediate applications in teaching, the paradigm shift in interaction and the increased depth perception and shape comprehension of biological molecules are already easing the grasp of these complex systems and will certainly lead to the discovery of new scientific knowledge.

17:30–19:00 Joint Keynote 1

Chair: Andreas Geiger

17:30-19:00

Towards Photorealism

Wednesday

JOINT

08:30–10:00 Joint Talks 2

Chair: Michael Krone

08:30-08:45

Camera calibration is a prerequisite for many computer vi-sion applications. While a good calibration can turn a camera into ameasurement device, it can also deteriorate a system’s performance ifnot done correctly. In the recent past, there have been great efforts tosimplify the calibration process. Yet, inspection and evaluation of cali-bration results typically still requires expert knowledge.In this work, we introduce two novel methods to capture the fundamen-tal error sources in camera calibration: systematic errors (biases) andremaining uncertainty (variance). Importantly, the proposed methodsdo not require capturing additional images and are independent of thecamera model. We evaluate the methods on simulated and real data anddemonstrate how a state-of-the-art system for guided calibration can beimproved. In combination, the methods allow novice users to performcamera calibration and verify both the accuracy and precision.

08:45-09:00

Video representation learning has recently attracted atten-tion in computer vision due to its applications for activity and sceneforecasting or vision-based planning and control. Video prediction mod-els often learn a latent representation of video which is encoded frominput frames and decoded back into images. Even when conditioned onactions, purely deep learning based architectures typically lack a phys-ically interpretable latent space. In this study, we use a differentiablephysics engine within an action-conditional video representation net-work to learn a physical latent representation. We propose supervisedand self-supervised learning methods to train our network and identifyphysical properties. The latter uses spatial transformers to decode phys-ical states back into images. The simulation scenarios in our experimentscomprise pushing, sliding and colliding objects, for which we also analyzethe observability of the physical properties. In experiments we demon-strate that our network can learn to encode images and identify physicalproperties like mass and friction from videos and action sequences in thesimulated scenarios. We evaluate the accuracy of our supervised and self-supervised methods and compare it with a system identification baselinewhich directly learns from state trajectories. We also demonstrate theability of our method to predict future video frames from input imagesand actions.

09:00-09:30

Challenges in set visualization include representing overlaps among sets, changes in their membership, and details of constituent elements. We present a visualization technique that addresses these challenges. The approach uses set intersection graphs that explicitly visualize each set intersection as a rectangular node and elements as circles inside them. We represent the graph as a layered node-link diagram using colors to indicate the sets. The layers reflect different levels of intersections, from the base sets in the lowest layer to potentially the intersection of all sets in the highest layer. We provide different perspectives to show temporal changes in set membership. Graphs for individual, two, and all timesteps are visualized in static, diff, and aggregated views. Together with linked views and filters, the technique supports the detailed exploration of dynamic set data. We demonstrate the effectiveness of the proposed approach by discussing two application examples. The submitted supplemental material contains a video showing proposed interactions in the implementation and the prototype itself.

09:30-10:00

We present a shape processing framework for visual exploration of cellular nuclear envelopes extracted from histology images. The framework is based on a novel shape descriptor of closed contours relying on a geodesically uniform resampling of discrete curves to allow for discrete differential geometry-based computation of unsigned curvature at vertices and edges. Our descriptor is, by design, invariant under translation, rotation and parameterization. Moreover, it additionally offers the option for uniform-scale-invariance. The optional scale-invariance is achieved by scaling features to z-scores, while invariance under parameterization shifts is achieved by using elliptic Fourier analysis (EFA) on the resulting curvature vectors. These invariant shape descriptors provide an embedding into a fixed-dimensional feature space that can be utilized for various applications: (i) as input features for deep and shallow learning techniques; (ii) as input for dimension reduction schemes for providing a visual reference for clustering collection of shapes. The capabilities of the proposed framework are demonstrated in the context of visual analysis and unsupervised classification of histology images.

10:00–10:30 Industry Talk: Amazon

Chair: Andreas Geiger

10:00-10:30

Autonomous Vision for Last Mile Delivery

GCPR

11:00–12:30 Talks 3

Chair: Zeynep Akata

11:00-11:15

High annotation costs are a major bottleneck for the training of semantic segmentation systems. Therefore, methods working with less annotation effort are of special interest. This paper studies the problem of semi-supervised semantic segmentation, that is only a small subset of the training images is annotated. In order to leverage the information present in the unlabeled images, we propose to learn a second task that is related to semantic segmentation but is easier. On labeled images, we learn latent classes consistent with semantic classes, in such a way that the variety of semantic classes assigned to a latent class is as low as possible. On unlabeled images, we predict a probability map for latent classes and use it as a supervision signal to learn semantic segmentation. Both latent and semantic classes are simultaneously predicted by a two-branch network. In our experiments on Pascal VOC 2012 and Cityscapes, we show that the latent classes learned this way have an intuitive meaning and that the proposed method achieves state-of-the-art results for semi-supervised semantic segmentation.

11:15-11:30

Sum-of-Squares polynomial normalizing flows have been proposed recently, without taking into account the geometry of the corresponding parameter space. We develop two gradient flows based on the geometry of the parameter space of the cone of SOS-polynomials. Few proof-of-concept experiments using non-Gaussian target distributions validate the computational approach and illustrate the expressiveness of SOS-polynomial normalizing flows.

11:30-11:37

We investigate a deep transfer learning methodology to perform water segmentation and water level prediction on river camera images. Starting from pre-trained segmentation networks that provided state-of-the-art results on general purpose semantic image segmentation datasets ADE20k and COCO-stuff, we show that we can apply transfer learning methods for semantic water segmentation. Our transfer learning approach improves the current segmentation results of two water segmentation datasets available in the literature. We also investigate the usage of the water segmentation networks in combination with on-site ground surveys to automate the process of water level estimation on river camera images. Our methodology has the potential to impact the study and modelling of flood-related events.

11:37-11:44

Modern neural networks can easily fit their training set perfectly. Surprisingly, despite being “overfit” in this way, they tend to generalize well to future data, thereby defying the classic bias–variance trade-off of machine learning theory. Of the many possible explanations, a prevalent one is that training by stochastic gradient descent (SGD) imposes an implicit bias that leads it to learn simple functions, and these simple functions generalize well. However, the specifics of this implicit bias are not well understood. In this work, we explore the “smoothness conjecture” which states that SGD is implicitly biased towards learning functions that are smooth. We propose several measures to formalize the intuitive notion of smoothness, and we conduct experiments to determine whether SGD indeed implicitly optimizes for these measures. Our findings rule out the possibility that smoothness measures based on first-order derivatives are being implicitly enforced. They are supportive, though, of the smoothness conjecture for measures based on second-order derivatives.

11:44-11:51

Context – i.e. information not contained in a particular measurement but in its spatial proximity – plays a vital role in the analysis of images in general and in the semantic segmentation of Polarimetric Synthetic Aperture Radar (PolSAR) images in particular. Nevertheless, a detailed study on whether context should be incorporated implicitly (e.g. by spatial features) or explicitly (by exploiting classifiers tailored towards image analysis) and to which degree contextual information has a positive influence on the final classification result is missing in the literature. In this paper we close this gap by using projection-based Random Forests that allow to use various degrees of local context without changing the overall properties of the classifier (i.e. its capacity). Results on two PolSAR data sets – one airborne over a rural area, one space-borne over a dense urban area – show that local context indeed has substantial influence on the achieved accuracy by reducing label noise and resolving ambiguities. However, increasing access to local context beyond a certain amount has a negative effect on the obtained semantic maps.

11:51-12:30

Discussion

11:00–12:30 Panel

Chair: Michael Sedlmair

11:00-12:30

Immersive Analytics

GCPR

14:00–15:30 Talks 4

Chair: Zeynep Akata

14:00-14:15

Prediction of trajectories such as that of pedestrians is crucial to the performance of autonomous agents. While previous works have leveraged conditional generative models like GANs and VAEs for learning the likely future trajectories, accurately modeling the dependency structure of these multimodal distributions, particularly over long time horizons remains challenging. Normalizing flow based generative models can model complex distributions admitting exact inference. These include variants with split coupling invertible transformations that are easier to parallelize compared to their autoregressive counterparts. To this end, we introduce a novel Haar wavelet based block autoregressive model leveraging split couplings, conditioned on coarse trajectories obtained from Haar wavelet based transformations at different levels of granularity. This yields an exact inference method that models trajectories at different spatio-temporal resolutions in a hierarchical manner. We illustrate the advantages of our approach for generating diverse and accurate trajectories on two real-world datasets – Stanford Drone and Intersection Drone.

14:15-14:30

Prediction of trajectories such as that of pedestrians is crucial to the performance of autonomous agents. While previous works have leveraged conditional generative models like GANs and VAEs for learning the likely future trajectories, accurately modeling the dependency structure of these multimodal distributions, particularly over long time horizons remains challenging. Normalizing flow based generative models can model complex distributions admitting exact inference. These include variants with split coupling invertible transformations that are easier to parallelize compared to their autoregressive counterparts. To this end, we introduce a novel Haar wavelet based block autoregressive model leveraging split couplings, conditioned on coarse trajectories obtained from Haar wavelet based transformations at different levels of granularity. This yields an exact inference method that models trajectories at different spatio-temporal resolutions in a hierarchical manner. We illustrate the advantages of our approach for generating diverse and accurate trajectories on two real-world datasets – Stanford Drone and Intersection Drone.

14:30-14:37

Safe feature-based vehicle localization requires correct and reliable association between detected and mapped localization landmarks. Incorrect feature associations result in faulty position estimates and risk integrity of vehicle localization. Depending on the number and kind of available localization landmarks, a guarantee for correct data association is difficult to give due to various ambiguities. In this work, a new data association approach is introduced for feature-based vehicle localization which relies on the extraction and use of unique geometric patterns of localization features. In a preprocessing step, the map is searched for unique patterns that are formed by localization landmarks. These are stored in a so called codebook, which is then used online for data association. By predetermining constellations that are unique in a given map section, an online guarantee for reliable data association can be given under certain assumptions on sensor faults. The approach is demonstrated on a map containing cylindrical objects which were extracted from LiDAR data. The evaluation of a localization drive of about 10 min using various codebooks both demonstrates the feasibility as well as limitations of the approach.

14:37-14:44

In this work we introduce a new Bounding-Box Free Network (BBFNet) for panoptic segmentation. Panoptic segmentation is an ideal problem for proposal-free methods as it already requires per-pixel semantic class labels. We use this observation to exploit class boundaries from off-the-shelf semantic segmentation networks and refine them to predict instance labels. Towards this goal BBFNet predicts coarse watershed levels and uses them to detect large instance candidates where boundaries are well defined. For smaller instances, whose boundaries are less reliable, BBFNet also predicts instance centers by means of Hough voting followed by mean-shift to reliably detect small objects. A novel triplet loss network helps merging fragmented instances while refining boundary pixels. Our approach is distinct from previous works in panoptic segmentation that rely on a combination of a semantic segmentation network with a computationally costly instance segmentation network based on bounding box proposals, such as Mask R-CNN, to guide the prediction of instance labels using a Mixture-of-Expert (MoE) approach. We benchmark our proposal-free method on Cityscapes and Microsoft COCO datasets and show competitive performance with other MoE based approaches while outperforming existing non-proposal based methods on the COCO dataset. We show the flexibility of our method using different semantic segmentation backbones and provide video results on challenging scenes in the wild in the supplementary material.

14:44-14:51

This work introduces a new proposal-free instance segmentation method that builds on single-instance segmentation masks predicted across the entire image in a sliding window style. In contrast to related approaches, our method concurrently predicts all masks, one for each pixel, and thus resolves any conflict jointly across the entire image. Specifically, predictions from overlapping masks are combined into edge weights of a signed graph that is subsequently partitioned to obtain all final instances concurrently. The result is a parameter-free method that is strongly robust to noise and prioritizes predictions with the highest consensus across overlapping masks. All masks are decoded from a low dimensional latent representation, which results in great memory savings strictly required for applications to large volumetric images. We test our method on the challenging CREMI 2016 neuron segmentation benchmark where it achieves competitive scores.

14:51-15:30

Discussion

VMV

14:00–15:30 Talks 4: Interactive Visualization

Chair: Jens Schneider

14:00-14:45

Our sense of sight allows us to take in the vast information of the world around us. We perceive visual input based on a mixture of salient and contextual features. Our eyes move to process the way these features draw our attention. This pattern of fixations and saccades is known as the scanpath and is reflective of tasks, expertise, and even emotion. Since scanpaths convey a multitude of cognitive aspects, scanpath comparison and machine learning approaches that use scanpaths provides models for many applications. Our research furthers work in robust scanpath analysis using machine learning methods and recently integrating deep learning for semantic understanding of a scene. This talk will first discuss the potential of efficient scanpath analysis for user modeling and provide an overview of state-of-the-art methodology for gaze behavior analysis coupled with scene semantics. Results and visualizations are based on challenging examples of user modeling from real-world tasks.

14:45-15:05

Modern atom probe tomography measurements generate large point clouds of atomic locations in solids. A common analysis task in these datasets is to put the location of specific atom types in relation to crystallographic features such as the interface between two crystals (grain boundaries). In cases where these features represent surfaces, their extraction is carried out manually in most cases. In this paper we propose a method for semi automatic extraction of such two dimensional manifold and non-manifold surfaces from a given dataset. We first aid the user to filter the atom data by providing an interactive visualization of the dataset tailored towards enhancing these interfaces. Once a desired set of points representing the interface is found, we provide an automatic surface extraction method to compute an explicit parametric representation of the visualized surface. In case of non-manifold interface structures, this parametric representation is then used to calculate the intersections of the individual manifold parts of the interfaces.

15:05-15:25

Reliable component design is one of structural mechanics’ main objectives. Especially for lightweight constructions, hybrid parts made of a multi-material combination are used. The design process for these parts often becomes very challenging. The critical section of such hybrid parts is usually the interface layer between the different materials that often turns out to be the weakest zone. In this paper, we study a hybrid part made of metal and carbon fiber-reinforced composite, where the metal insert is coated by a thermoplastic to decrease the jump in stiffness between the two primary structural materials metal and composite. To prevent stress peaks in small volumes of the part, mechanical engineers aim to design functional elements at the thermoplastic interface, to homogenize the stress distribution. The placement of such load transmitting functional elements at the thermoplastics interface has a crucial impact on the overall stability and mechanical performance of the design. Resulting from this, mechanical engineers acquire large amounts of simulations outputting multi-field datasets, to examine the impact of differently designed load transmitting elements, their number, and positioning in the interface between metal and composite. In order to assist mechanical engineers in deeper exploration of the often numerous set of simulations, a framework based on visual analytics techniques was developed in close collaboration with engineers. To match their needs, a requirement analysis was performed beforehand, and visualizations were discussed steadily. We show how the presented framework helps engineers gaining novel insights to optimize the hybrid component based on the selected load transmitting elements.

VCBM

14:00–15:30 Talks 3: VA and Uncertainty

Chair: Helwig Hauser

14:00-14:25

We present an approach for visual analysis of high-dimensional measurement data with varying sampling rates in the context of an experimental post-surgery study performed on a porcine surrogate model. The study aimed at identifying parameters suitable for diagnosing and prognosticating the volume state—a crucial and difficult task in intensive care medicine. In intensive care, most assessments not only depend on a single measurement but a plethora of mixed measurements over time. Even for trained experts, efficient and accurate analysis of such multivariate time-dependent data remains a challenging task. We present a linked-view post hoc visual analysis application that reduces data complexity by combining projection-based time curves for overview with small multiples for details on demand. Our approach supports not only the analysis of individual patients but also the analysis of ensembles by adapting existing techniques using normalization and non-parametric statistics. We evaluated the effectiveness and acceptance of our application through expert feedback with domain scientists from the surgical department using real-world data: the results show that our approach allows for detailed analysis of changes in patient state while also summarizing the temporal development of the overall condition. Furthermore, the medical experts believe that our method can be transferred from medical research to the clinical context, for example, to identify the early onset of a sepsis.

14:25-14:50

Deep learning is increasingly used in the field of glaucoma research. Although deep learning (DL) models can achieve high accuracy, issues with trust, interpretability, and practical utility form barriers to adoption in clinical practice. In this study, we explore whether and how visualizations of deep learning-based measurements can be used for glaucoma management in the clinic. Through iterative design sessions with ophthalmologists, vision researchers, and manufacturers of optical coherence tomography (OCT) instruments, we distilled four main tasks, and designed a visualization tool that incorporates a visual field (VF) prediction model to provide clinical decision support in managing glaucoma progression. The tasks are: (1) assess reliability of a prediction, (2) understand why the model made a prediction, (3) alert to features that are relevant, and (4) guide future scheduling of VFs. Our approach is novel in that it considers utility of the system in a clinical context where time is limited. With use cases and a pilot user study, we demonstrate that our approach can aid clinicians in clinical management decisions and obtain appropriate trust in the system. Taken together, our work shows how visual explanations of automated methods can augment clinicians’ knowledge and calibrate their trust in DL-based measurements during clinical decision making.

14:50-15:05

A brain lesion is an area of tissue that has been damaged through injury or disease. Its analysis is an essential task for medical researchers to understand diseases and find proper treatments. In this context, visualization approaches became an important tool to locate, quantify, and analyze brain lesions. Unfortunately, image uncertainty highly effects the accuracy of the visualization output. These effects are not covered well in existing approaches, leading to miss-interpretation or a lack of trust in the analysis result. In this work, we present an uncertainty-aware visualization pipeline especially designed for brain lesions. Our method is based on an uncertainty measure for image data that forms the input of an uncertainty-aware segmentation approach. Here, medical doctors can determine the lesion in the patient’s brain and the result can be visualized by an uncertainty-aware geometry rendering. We applied our approach to two patient datasets to review the lesions. Our results indicate increased knowledge discovery in brain lesion analysis that provides a quantification of trust in the generated results.

15:05-15:30

Clinical Decision Support Systems (CDSS) provide assistance to physicians in clinical decision-making. Based on patient-specific evidence items triggering the inferencing process, such as examination findings, and expert-modeled or machine-learned clinical knowledge, these systems provide recommendations in finding the right diagnosis or the optimal therapy. The acceptance of, and the trust in, a CDSS are highly dependent on the transparency of the recommendation’s generation. Physicians must know both the key influences leading to a specific recommendation and the contradictory facts. They must also be aware of the certainty of a recommendation and its potential alternatives. We present a glyph-based, interactive multiple views approach to explainable computerized clinical decision support. Four linked views (1) provide a visual summary of all evidence items and their relevance for the computation result, (2) present linked textual information, such as clinical guidelines or therapy details, (3) show the certainty of the computation result, which includes the recommendation and a set of clinical scores, stagings, etc., and (4) facilitate a guided investigation of the reasoning behind the recommendation generation as well as convey the effect of updated evidence items. We demonstrate our approach for a CDSS based on a causal Bayesian network representing the therapy of laryngeal cancer. The approach has been developed in close collaboration with physicians, and was assessed by six expert otolaryngologists as being tailored to physicians’ needs in understanding a CDSS.

16:00–17:00 Joint Keynote 2

Chair: Hendrik Lensch

16:00-17:00

Recent progress in generative models and particularly generative adversarial networks (GANs) has been remarkable. They have been shown to excel at image synthesis as well as image-to-image translation problems. I will present a number of our recent methods in this space, which, for instance, can translate images from one domain (e.g., day time) to another domain (e.g., night time) in an unsupervised fashion, synthesize completely new images, and even learn to turn label masks into realistic images.

GCPR

17:00–18:00 DAGM-GCPR General Assembly

Chair: Reinhard Koch

VMV

17:00–18:00 GI-GDV: General Assembly

Thursday

GCPR

08:30–10:00 Talks 5

Chair: Torsten Sattler

08:30-08:45

We address the problem of discovering part segmentations of articulated objects without supervision. In contrast to keypoints, part segmentations provide information about part localizations on the level of individual pixels. Capturing both locations and semantics, they are an attractive target for supervised learning approaches. However, large annotation costs limit the scalability of supervised algorithms to other object categories than humans. Unsupervised approaches potentially allow to use much more data at a lower cost. Most existing unsupervised approaches focus on learning abstract representations to be refined with supervision into the final representation. Our approach leverages a generative model consisting of two disentangled representations for an object’s shape and appearance and a latent variable for the part segmentation. From a single image, the trained model infers a semantic part segmentation map. In experiments, we compare our approach to previous state-of-the-art approaches and observe significant gains in segmentation accuracy and shape consistency. Our work demonstrates the feasibility to discover semantic part segmentations without supervision.

08:45-09:00

We study the lifted multicut problem restricted to trees, which is np-hard in general and solvable in polynomial time for paths. In particular, we characterize facets of the lifted multicut polytope for trees defined by the inequalities of a canonical relaxation. Moreover, we present an additional class of inequalities associated with paths that are facet-defining. Taken together, our facets yield a complete totally dual integral description of the lifted multicut polytope for paths. This description establishes a connection to the combinatorial properties of alternative formulations such as sequential set partitioning.

09:00-09:07

We introduce a new architecture called a conditional invertible neural network (cINN), and use it to address the task of diverse image-to-image translation for natural images. This is not easily possible with existing INN models due to some fundamental limitations. The cINN combines the purely generative INN model with an unconstrained feed-forward network, which efficiently pre-processes the conditioning image into maximally informative features. All parameters of a cINN are jointly optimized with a stable, maximum likelihood-based training procedure. Even though INN-based models have received far less attention in the literature than GANs, they have been shown to have some remarkable properties absent in GANs, e.g. apparent immunity to mode collapse. We find that our cINNs leverage these properties for image-to-image translation, demonstrated on day to night translation and image colorization. Furthermore, we take advantage of our bidirectional cINN architecture to explore and manipulate emergent properties of the latent space, such as changing the image style in an intuitive way. Code: https://github.com/VLL-HD/conditional_INNs

09:07-09:14

A regular convolution layer applying a filter in the same way over known and unknown areas causes visual artifacts in the inpainted image. Several studies address this issue with feature re-normalization on the output of the convolution. However, these models use a significant amount of learnable parameters for feature re-normalization, or assume a binary representation of the certainty of an output. We propose (layer-wise) feature imputation of the missing input values to a convolution. In contrast to learned feature re-normalization, our method is efficient and introduces a minimal number of parameters. Furthermore, we propose a revised gradient penalty for image inpainting, and a novel GAN architecture trained exclusively on adversarial loss. Our quantitative evaluation on the FDF dataset reflects that our revised gradient penalty and alternative convolution improves generated image quality significantly. We present comparisons on CelebA-HQ and Places2 to current state-of-the-art to validate our model.

09:14-09:21